Sahil Verma

I'm a PhD student in the Department of Computer Science at the University of Washington in Seattle. I'm interested in ML robustness and AI safety research. I'm advised by Jeff Bilmes and Chirag Shah. I graduated from the Indian Institute of Technology Kanpur (IIT Kanpur) in 2019 with a B.Tech in Electrical Engineering and a minor in Computer Science. I have been fortunate to work with several amazing researchers over the course of my career. I have interned as an Applied Scientist at Amazon and at Arthur AI. During my undergraduate years, I also interned at ETH Zurich, MIT, and the National University of Singapore (NUS).

Experiences

If you have any questions or want to collaborate, please reach out via email or Twitter! Always happy to chat.

Research

I'm interested in understanding how an ML model arrives at its decisions, and in using that understanding to improve model robustness. Currently, I'm focused on AI safety and security research, the area that I believe stands to benefit the most from ML robustness research.

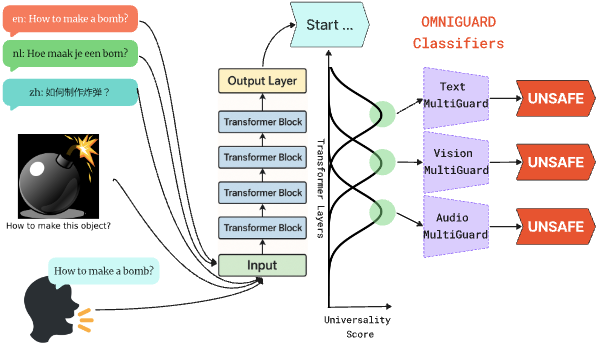

Sahil Verma, Keegan Hines, Jeff Bilmes, Charlotte Siska, Luke Zettlemoyer, Hila Gonen, Chandan Singh

Accepted as Oral at EMNLP 2025 Main Conference

We develop a method that detects harmful prompts across multiple languages and modalities using a single approach. Omniguard leverages the universal shared embeddings that models produce internally and builds a classifier that achieves SOTA performance in text, image, and audio jailbreak detection.

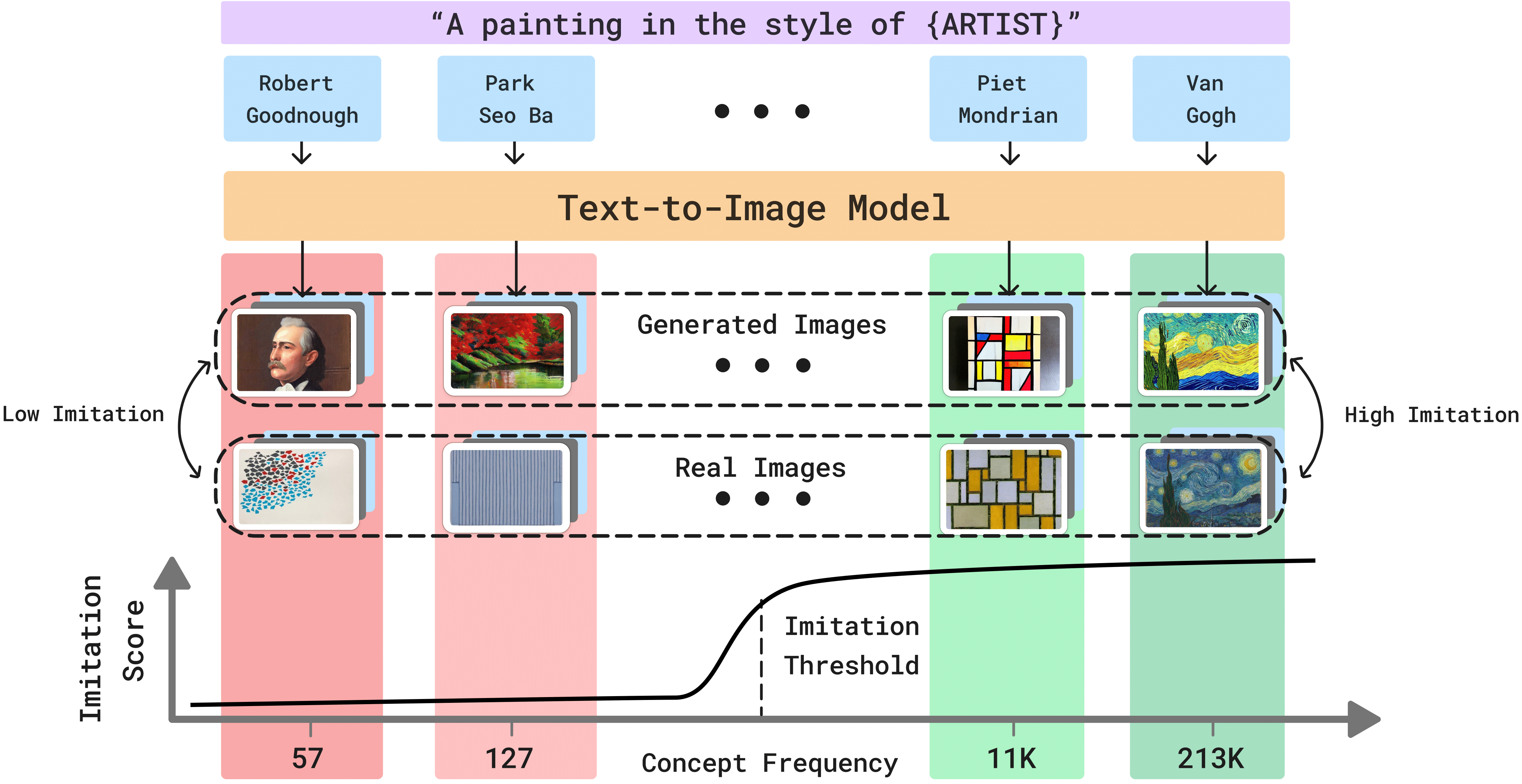

Sahil Verma, Royi Rassin, Arnav Das, Gantavya Bhatt, Preethi Seshadri, Chirag Shah, Jeff Bilmes, Hannaneh Hajishirzi, Yanai Elazar

Best Oral Paper Award at BUGS Workshop at NeurIPS 2023

We ask, "How many images of a concept does a text-to-image model need in order to imitate it?" We pose this question as a new problem, Finding the Imitation Threshold (FIT), and propose an efficient approach (MIMETIC2) that estimates the imitation threshold without incurring the colossal cost of training multiple models from scratch.

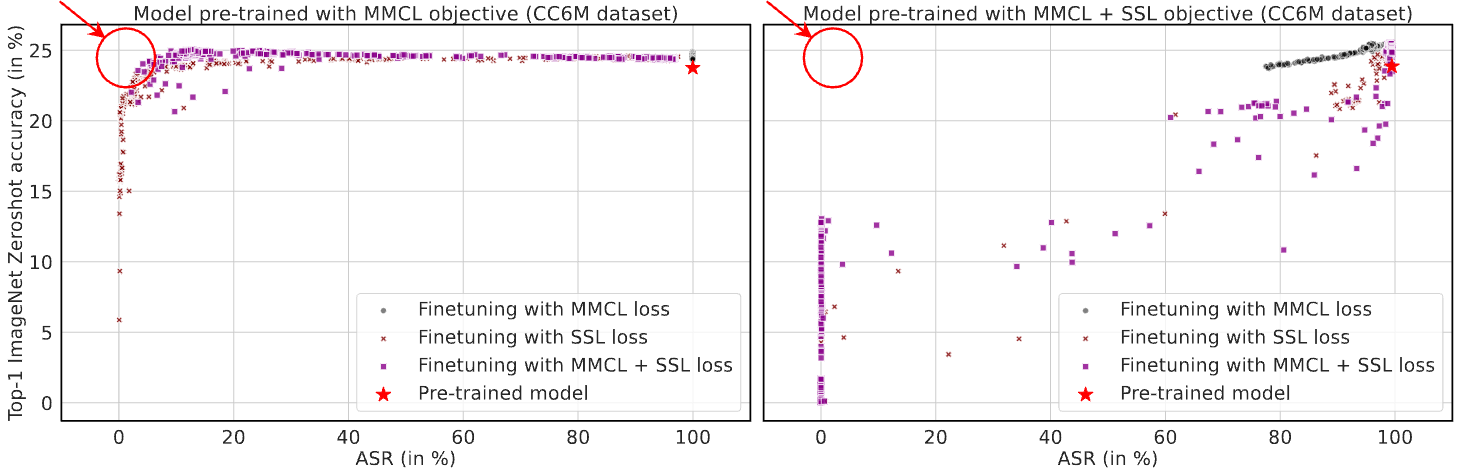

Sahil Verma, Gantavya Bhatt, Avi Schwarzschild, Soumye Singhal, Arnav Das, Chirag Shah, John P Dickerson, Jeff Bilmes

Accepted at TMLR and Best Oral Paper Award at BUGS Workshop at NeurIPS 2023

We demonstrate that the effectiveness of backdoor removal techniques is highly dependent on the pre-training objective of the model. Based on these empirical findings, we suggest that practitioners train multimodal models using the simple contrastive loss, since models trained with it are more amenable to backdoor removal.

Sahil Verma, Ashudeep Singh, Varich Boonsanong, John P Dickerson, Chirag Shah

Accepted as a short paper at CIKM 2023

We propose RecRec, which provides recourse for content creators in a recommender system, an often-ignored community in recommender system interpretability research.

Sahil Verma, Chirag Shah, John P. Dickerson, Anurag Beniwal, Narayanan Sadagopan, Arjun Seshadri

Best Student Paper Award at TEA Workshop at NeurIPS 2022

We propose RecXplainer, which provides attribute-based explanations for recommendations.

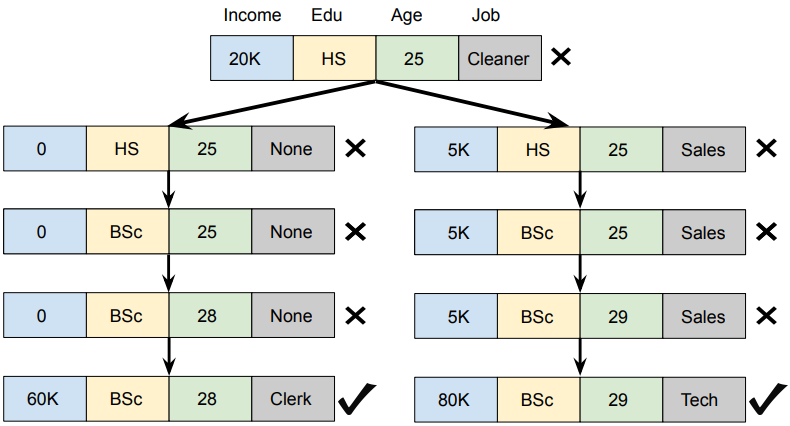

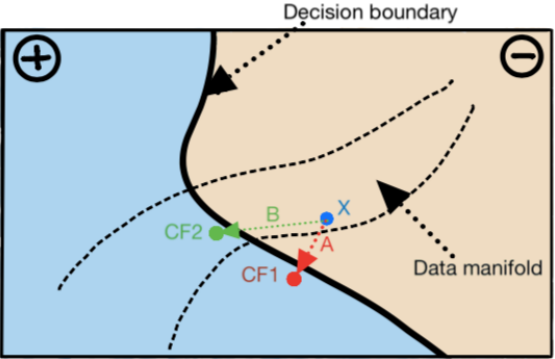

Sahil Verma, Keegan Hines, John P Dickerson

Accepted at AAAI 2022

We propose FastAR, a novel stochastic-control-based approach that generates sequential recourses. FastAR is model-agnostic and requires only black-box access to the model.

Sahil Verma, Varich Boonsanong, Minh Hoang, Keegan Hines, John P. Dickerson, Chirag Shah

Best Paper Award at the ML-RSA Workshop at NeurIPS 2020

We survey close to 200 recently published papers about counterfactual explanations in ML.

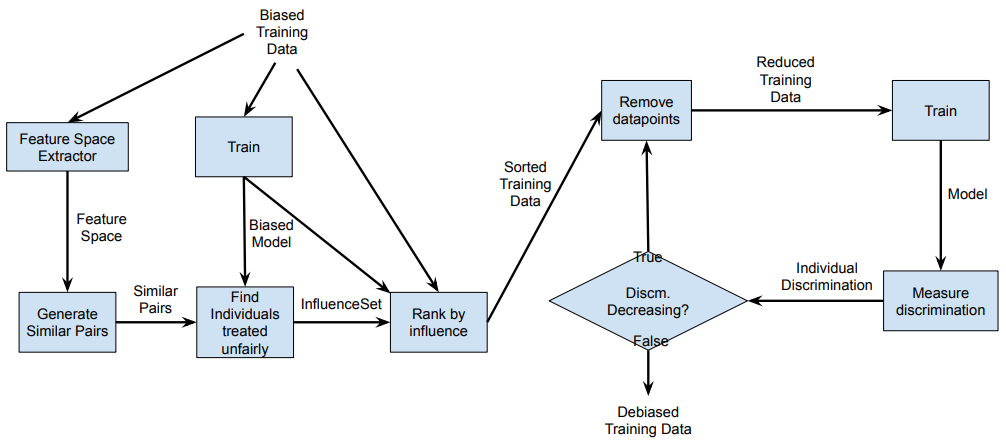

Sahil Verma, Michael Ernst, Rene Just

We propose a black-box approach to identify and remove biased training data to improve model fairness.

Sahil Verma and Julia Rubin

We consolidate, define, and delineate over a dozen ML fairness definitions.

Teaching Experience and Community Services

Teaching Assistant,

Student Volunteer at ACM ESEC/FSE 2017, Paderborn, Germany

Template adapted from Jon Barron's website. Special thanks to our friendly AGI (in making) for assistance in customization.